Text Mining

USING TIDY DATA PRINCIPLES

Hello!

Let’s install some packages
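The install command for this slide is not shown. A minimal sketch, assuming these four packages cover what the rest of the slides use (the list is an assumption, not taken from the slides):

```r
# Packages assumed to be needed for the rest of the slides
pkgs <- c("tidyverse", "tidytext", "stopwords", "gutenbergr")

# Install only the packages that are not already present
missing_pkgs <- setdiff(pkgs, rownames(installed.packages()))
if (length(missing_pkgs) > 0) {
  install.packages(missing_pkgs)
}
```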

What do we mean by tidy text?

text <- c("Tell all the truth but tell it slant —",

"Success in Circuit lies",

"Too bright for our infirm Delight",

"The Truth's superb surprise",

"As Lightning to the Children eased",

"With explanation kind",

"The Truth must dazzle gradually",

"Or every man be blind —")

text

#> [1] "Tell all the truth but tell it slant —"

#> [2] "Success in Circuit lies"

#> [3] "Too bright for our infirm Delight"

#> [4] "The Truth's superb surprise"

#> [5] "As Lightning to the Children eased"

#> [6] "With explanation kind"

#> [7] "The Truth must dazzle gradually"

#> [8] "Or every man be blind —"

What do we mean by tidy text?

library(tidyverse)

text_df <- tibble(line = 1:8, text = text)

text_df

#> # A tibble: 8 × 2

#> line text

#> <int> <chr>

#> 1 1 Tell all the truth but tell it slant —

#> 2 2 Success in Circuit lies

#> 3 3 Too bright for our infirm Delight

#> 4 4 The Truth's superb surprise

#> 5 5 As Lightning to the Children eased

#> 6 6 With explanation kind

#> 7 7 The Truth must dazzle gradually

#> 8 8 Or every man be blind —

What do we mean by tidy text?
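Tidy text means one token per row. A minimal sketch of that step for the poem, using unnest_tokens() from tidytext (the name tidy_text is only illustrative):

```r
library(dplyr)
library(tidytext)

text <- c("Tell all the truth but tell it slant —",
          "Success in Circuit lies",
          "Too bright for our infirm Delight",
          "The Truth's superb surprise",
          "As Lightning to the Children eased",
          "With explanation kind",
          "The Truth must dazzle gradually",
          "Or every man be blind —")

text_df <- tibble(line = 1:8, text = text)

# Split each line into lowercase word tokens, one row per word;
# the line column is kept so every word can be traced back to its line
tidy_text <- text_df %>%
  unnest_tokens(word, text)

tidy_text
```

By default, unnest_tokens() lowercases the tokens and strips punctuation, and the result has one row per word rather than one row per line.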

Jane wants to know…

A tidy text dataset typically has

- more

- fewer

rows than the original, non-tidy text dataset.

Gathering more data

You can access the full text of many public domain works from Project Gutenberg using the gutenbergr package.

What book do you want to analyze today? 📖🥳📖
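A minimal sketch of the download step, assuming you want the book behind the gutenberg_id 1342 that appears in the glimpse() output later on (Pride and Prejudice). Swap in any other ID you like. This needs a network connection.

```r
library(gutenbergr)

# 1342 is the gutenberg_id shown in the glimpse() output;
# replace it with the ID of the book you want to analyze
full_text <- gutenberg_download(1342)

# One row per line of the book, with columns gutenberg_id and text
full_text
```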

Time to tidy your text!

tidy_book <- full_text %>%

mutate(line = row_number()) %>%

unnest_tokens(word, text)

glimpse(tidy_book)

#> Rows: 127,996

#> Columns: 3

#> $ gutenberg_id <int> 1342, 1342, 1342, 1342, 1342, 1342, 1342, 1342, 1342, 134…

#> $ line <int> 1, 3, 3, 4, 6, 6, 6, 6, 7, 9, 9, 12, 14, 14, 14, 14, 14, …

#> $ word <chr> "illustration", "george", "allen", "publisher", "156", "c…

What are the most common words?

What do you predict will happen if we run the following code? 🤔
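The code for this slide is not shown. One common way to find the most frequent words is count() with sort = TRUE. The sketch below uses the short poem from earlier as a stand-in for tidy_book so that it runs offline (tidy_poem and word_counts are illustrative names):

```r
library(dplyr)
library(tidytext)

# Stand-in for the book: the poem from the start of the slides
poem <- c("Tell all the truth but tell it slant —",
          "Success in Circuit lies",
          "Too bright for our infirm Delight",
          "The Truth's superb surprise",
          "As Lightning to the Children eased",
          "With explanation kind",
          "The Truth must dazzle gradually",
          "Or every man be blind —")

tidy_poem <- tibble(line = seq_along(poem), text = poem) %>%
  unnest_tokens(word, text)

# Count how often each word occurs, most frequent first
word_counts <- tidy_poem %>%
  count(word, sort = TRUE)

word_counts
```

Even in this tiny example the top of the list is a function word ("the"), which is the motivation for removing stop words next.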

Stop words
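A minimal sketch of looking at stop word lists and removing them with anti_join(). get_stopwords() comes from tidytext and relies on the stopwords package. The poem again stands in for the book, and content_words is an illustrative name:

```r
library(dplyr)
library(tidytext)

get_stopwords()                   # default: English, "snowball" source
get_stopwords(language = "es")    # a different language
get_stopwords(source = "smart")   # a different, larger English list

poem <- c("Tell all the truth but tell it slant —",
          "Success in Circuit lies",
          "Too bright for our infirm Delight",
          "The Truth's superb surprise",
          "As Lightning to the Children eased",
          "With explanation kind",
          "The Truth must dazzle gradually",
          "Or every man be blind —")

tidy_poem <- tibble(line = seq_along(poem), text = poem) %>%
  unnest_tokens(word, text)

# anti_join() keeps only the rows whose word is NOT in the stop word list
content_words <- tidy_poem %>%
  anti_join(get_stopwords(), by = "word") %>%
  count(word, sort = TRUE)

content_words
```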

What are the most common words?

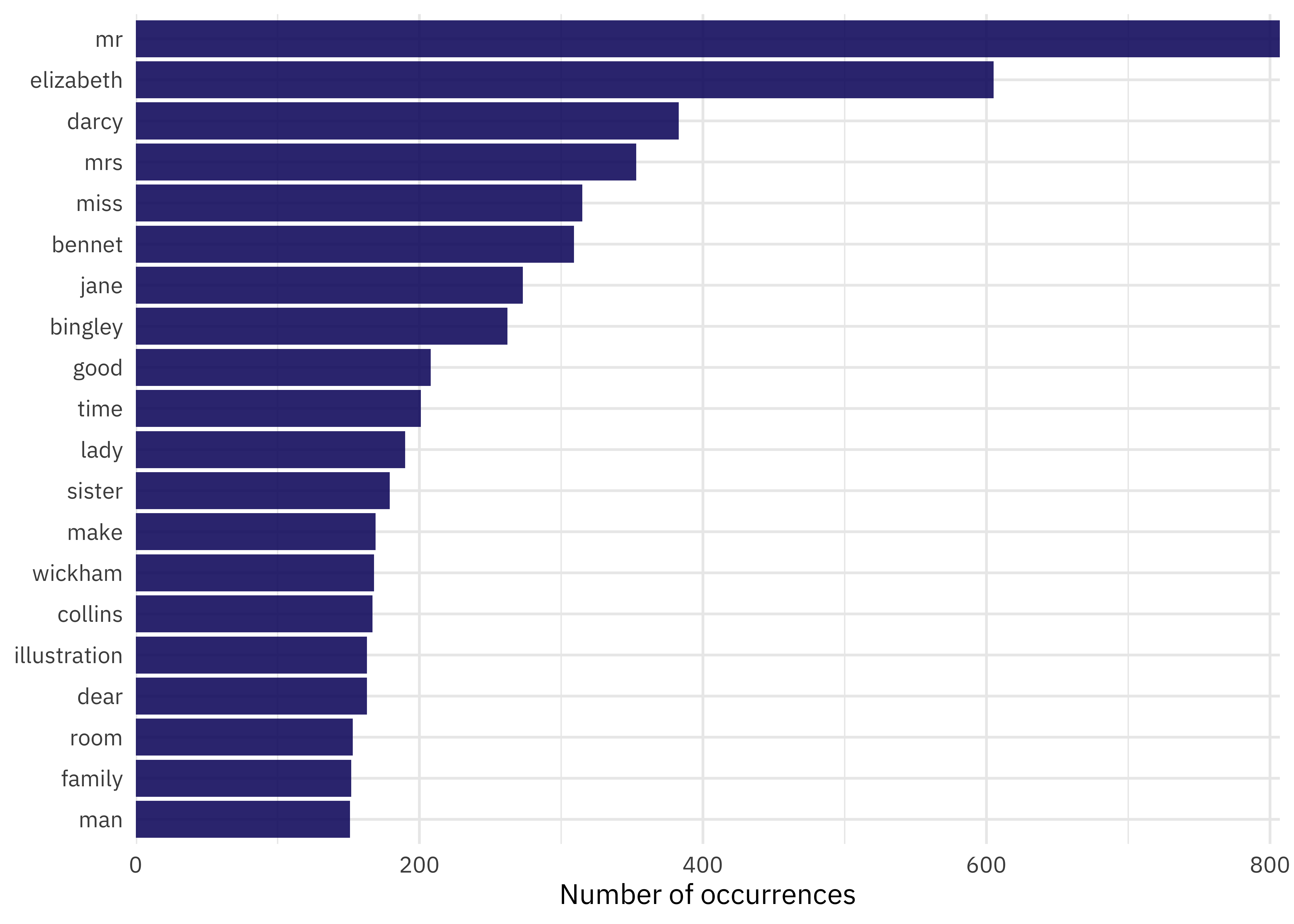

U N S C R A M B L E

anti_join(get_stopwords(source = "smart")) %>%

tidy_book %>%

count(word, sort = TRUE) %>%

geom_col() +

slice_max(n, n = 20) %>%

ggplot(aes(n, fct_reorder(word, n))) +

What are the most common words?
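One possible unscrambling of the lines above, shown as a sketch rather than the definitive answer. The poem stands in for tidy_book so the example runs offline, and by = "word" is added only to silence the join message:

```r
library(dplyr)
library(tidytext)
library(ggplot2)
library(forcats)

# Stand-in for tidy_book (assumption: swap in your own tidied book)
poem <- c("Tell all the truth but tell it slant —",
          "Success in Circuit lies",
          "Too bright for our infirm Delight",
          "The Truth's superb surprise",
          "As Lightning to the Children eased",
          "With explanation kind",
          "The Truth must dazzle gradually",
          "Or every man be blind —")

tidy_book <- tibble(line = seq_along(poem), text = poem) %>%
  unnest_tokens(word, text)

p <- tidy_book %>%
  anti_join(get_stopwords(source = "smart"), by = "word") %>%  # drop stop words
  count(word, sort = TRUE) %>%                                 # count what is left
  slice_max(n, n = 20) %>%                                     # keep the top 20 (ties included)
  ggplot(aes(n, fct_reorder(word, n))) +                       # order the bars by count
  geom_col()

p
```

The pipe (%>%) connects the data steps, and the plus (+) takes over once ggplot() has been called.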

SENTIMENT ANALYSIS

😄😢😠

Sentiment lexicons

get_sentiments("bing")

#> # A tibble: 6,786 × 2

#> word sentiment

#> <chr> <chr>

#> 1 2-faces negative

#> 2 abnormal negative

#> 3 abolish negative

#> 4 abominable negative

#> 5 abominably negative

#> 6 abominate negative

#> 7 abomination negative

#> 8 abort negative

#> 9 aborted negative

#> 10 aborts negative

#> # … with 6,776 more rows

Sentiment lexicons

get_sentiments("nrc")

#> # A tibble: 13,872 × 2

#> word sentiment

#> <chr> <chr>

#> 1 abacus trust

#> 2 abandon fear

#> 3 abandon negative

#> 4 abandon sadness

#> 5 abandoned anger

#> 6 abandoned fear

#> 7 abandoned negative

#> 8 abandoned sadness

#> 9 abandonment anger

#> 10 abandonment fear

#> # … with 13,862 more rows

Sentiment lexicons

get_sentiments("loughran")

#> # A tibble: 4,150 × 2

#> word sentiment

#> <chr> <chr>

#> 1 abandon negative

#> 2 abandoned negative

#> 3 abandoning negative

#> 4 abandonment negative

#> 5 abandonments negative

#> 6 abandons negative

#> 7 abdicated negative

#> 8 abdicates negative

#> 9 abdicating negative

#> 10 abdication negative

#> # … with 4,140 more rows

Implementing sentiment analysis

Jane wants to know…

What kind of join is appropriate for sentiment analysis?

- anti_join()

- full_join()

- outer_join()

- inner_join()
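To see what the choice of join does, here is a small sketch that compares two of the options on a handful of words. The words tibble is made up for illustration, and which of these words the Bing lexicon contains is left for you to check by running it:

```r
library(dplyr)
library(tidytext)

# A few hypothetical tokens, standing in for a tidied book
words <- tibble(word = c("superb", "delight", "blind", "explanation"))
bing <- get_sentiments("bing")

# inner_join() keeps only the words found in the lexicon
# and attaches their sentiment
matched <- inner_join(words, bing, by = "word")

# anti_join() keeps only the words the lexicon does NOT contain
unmatched <- anti_join(words, bing, by = "word")

matched
unmatched
```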

Implementing sentiment analysis

What do you predict will happen if we run the following code? 🤔

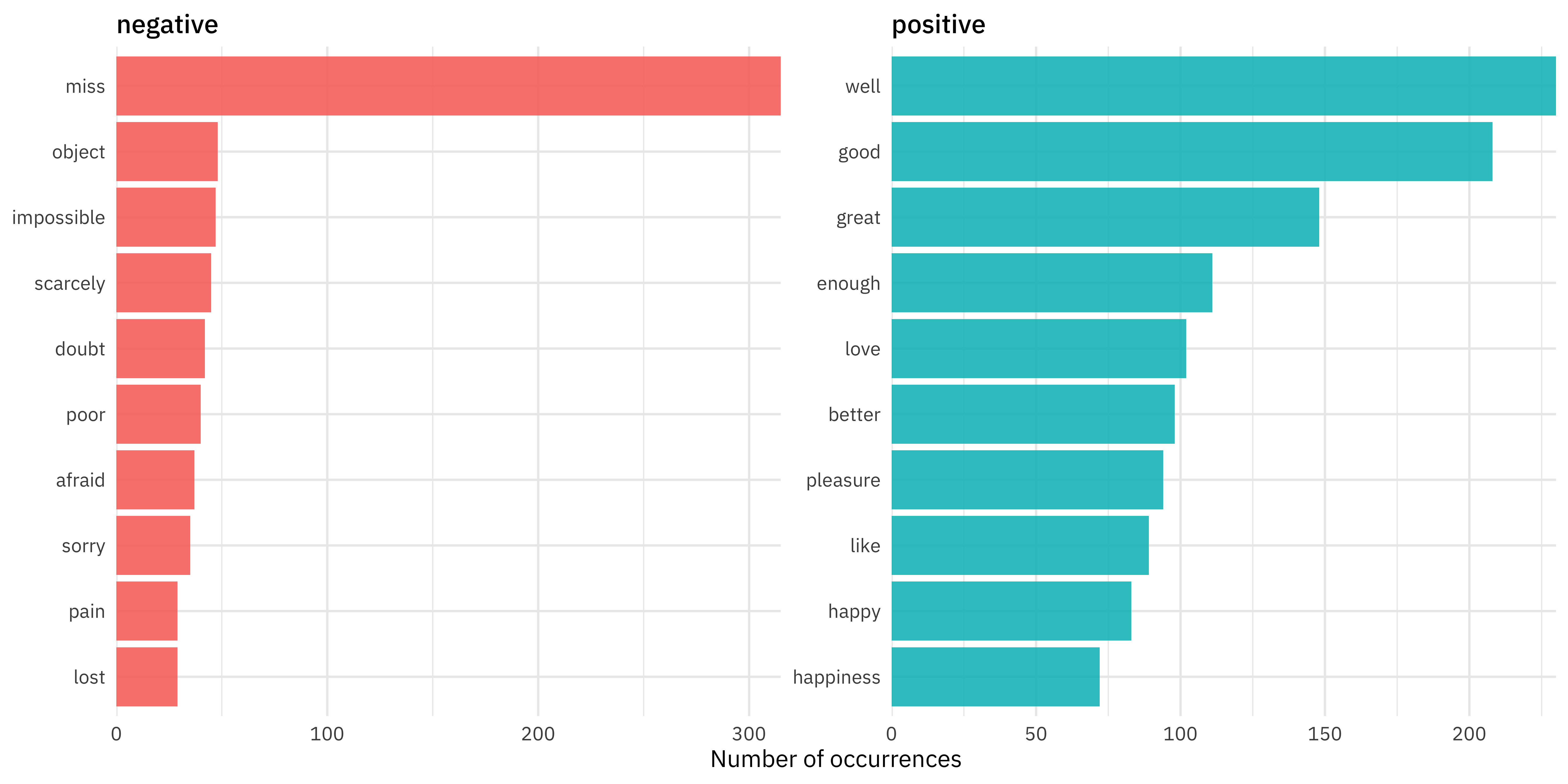

tidy_book %>%

inner_join(get_sentiments("bing")) %>%

count(sentiment, word, sort = TRUE)

#> # A tibble: 1,503 × 3

#> sentiment word n

#> <chr> <chr> <int>

#> 1 negative miss 315

#> 2 positive well 230

#> 3 positive good 208

#> 4 positive great 148

#> 5 positive enough 111

#> 6 positive love 102

#> 7 positive better 98

#> 8 positive pleasure 94

#> 9 positive like 89

#> 10 positive happy 83

#> # … with 1,493 more rows

Implementing sentiment analysis
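The counts above can also be plotted. What follows is a sketch of one way to do it, not necessarily the plot from the original slide. The poem stands in for tidy_book so the example runs offline. The top-10 cutoff and the facet layout are assumptions.

```r
library(dplyr)
library(tidytext)
library(ggplot2)
library(forcats)

# Stand-in for tidy_book (assumption: swap in your own tidied book)
poem <- c("Tell all the truth but tell it slant —",
          "Success in Circuit lies",
          "Too bright for our infirm Delight",
          "The Truth's superb surprise",
          "As Lightning to the Children eased",
          "With explanation kind",
          "The Truth must dazzle gradually",
          "Or every man be blind —")

tidy_book <- tibble(line = seq_along(poem), text = poem) %>%
  unnest_tokens(word, text)

sentiment_plot <- tidy_book %>%
  inner_join(get_sentiments("bing"), by = "word") %>%
  count(sentiment, word, sort = TRUE) %>%
  group_by(sentiment) %>%
  slice_max(n, n = 10) %>%      # top words within each sentiment
  ungroup() %>%
  ggplot(aes(n, fct_reorder(word, n), fill = sentiment)) +
  geom_col(show.legend = FALSE) +
  facet_wrap(vars(sentiment), scales = "free")

sentiment_plot
```

Read the results with the text in mind: in the output above, the top negative word is "miss", which in a novel like this one is often a form of address and not a sentiment.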

Thanks!

Slides created with Quarto